Iar Workbench 8051 Crack

As an embedded design consultant, I find that the diverse collection of projects on my desk needs an equally diverse collection of microcontroller architectures that have the performance, peripheral selection, and power numbers to be the backbone of successful projects. At the same time, we all have our go-to chips: those parts that linger in our toolkit after being picked up in school, through forum posts, or from previous projects.

In 2017, we saw several new MCUs hit the market, as well as general trends continuing in the industry: the migration to open-source, cross-platform development environments and toolchains; new code-generator tools that integrate seamlessly (or not so seamlessly) into IDEs; and, most notably, the continued invasion of ARM Cortex-M0+ parts into the 8-bit space. I wanted to take a quick pulse of the industry to see where everything is, and what I’ve been missing while backed into my corner of DigiKey’s web site. It’s time for a good ol’ microcontroller shoot-out.

The Rules

While some projects that come across my desk are complex enough to require a hundreds-of-MHz microcontroller with all the bells and whistles, it’s amazing how many projects work great using nothing more than a $1 chip, so this is the only rule I established for this microcontroller review. To get technical: I purchased several different MCUs, all less than $1, from a wide variety of brands and distributors. I’m sure people will chime in and either claim that a part is more than a dollar, or that I should have used another part which can be had for less than a dollar.

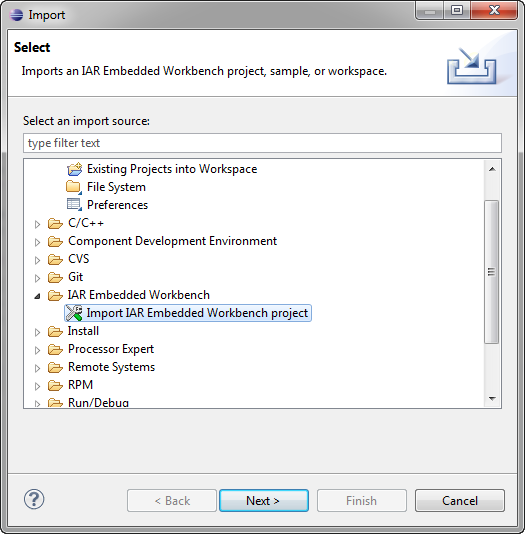


I used a price-break of 100 units when determining pricing, and I looked at typical, general suppliers I personally use when shopping for parts — I avoided eBay/AliExpress/Taobao unless they were the only source for the parts, which is common for devices most popular in China and Taiwan. I wanted to explore the $1 pricing zone specifically because it’s the least amount of money you can spend on an MCU that’s still general-purpose enough to be widely useful in a diverse array of projects. Any cheaper, and you end up with 6- or 8-pin parts with only a few dozen bytes of RAM, no ADC, nor any peripherals other than a single timer and some GPIO. Any more expensive, and the field completely opens up to an overwhelming number of parts — all with heavily-specialized peripherals and connectivity options. These MCUs were selected to represent their entire families — or sub-families, depending on the architecture — and in my analysis, I’ll offer some information about the family as a whole. If you want to scroll down and find out who the winner is, don’t bother — there’s really no sense in trying to declare the “king of $1 MCUs” as everyone knows the best microcontroller is the one that best matches your application needs.

I mean, everyone knows the best microcontroller is the one you already know how to use. No, wait — the best microcontroller is definitely the one that is easiest to prototype with. Or maybe that has the lowest impact on BOM pricing? I can’t even decide on the criteria for the best microcontroller — let alone crown a winner. What I will do, however, is offer a ton of different recommendations for different users at the end.

The Criteria

This microcontroller selection guide will have both qualitative and quantitative assessments. Overall, I’ll be looking at a few different categories:

Parametrics, Packaging, and Peripherals

Within a particular family, what is the range of core speed? Package options?

Some microcontroller families are huge — with hundreds of different models that you can select from to find the perfect MCU for your application. Some families are much smaller, which means you’re essentially going to pay for peripherals, memory, or features you don’t need. But these have an economies-of-scale effect; if we only have to produce five different MCU models, we’ll be able to make a lot more of each of them, driving down the price. How do different MCU families end up on that spectrum? Package availability is another huge factor to consider.

A professional electronics engineer working on a wearable consumer product might be looking for a wafer-level CSP package that’s less than 2×2 mm in size. A hobbyist who is uncomfortable with surface-mount soldering may be looking for a legacy DIP package that can be used with breadboards and solder protoboards.

Different manufacturers choose packaging options carefully, so before you dive into an architecture for a project, one of the first things to consider is making sure that it’s in a package you actually want to deal with. Peripherals can vary widely from architecture to architecture.

Some MCUs have extremely powerful peripherals with multiple interrupt channels, DMA, internal clock generators, tons of power configuration control, and various clocking options. Others are incredibly simple, almost basic. Just as before, different people will be looking for different things (even for different applications). It would be a massive undertaking to go over every single peripheral on these MCUs, but I’ll focus on the ones that all MCUs have in common, and point out fine-print “gotchas” that datasheets always seem to gloss over.

Development Experience

Any microcontroller review or selection guide should include a discussion of the overall development environment and experience.

While this is where things get subjective and opinion-oriented, I’ll attempt to present “just the facts” and let you decide what you care about. The main source of subjectivity comes from weighing these facts appropriately, which I will not attempt to do. IDEs / SDKs / Compilers: What is the manufacturer-suggested IDE for developing code on the MCU? Are there other options?

What compilers does the microcontroller support? Is the software cross-platform? How much does it cost? These are the sorts of things I’ll be exploring while evaluating the software for the MCU architecture. Platform functionality and features will vary a lot by architecture, but I’ll look at basic project management, source-code editor quality, initialization code-generation tools, run-time peripheral libraries, debugging experience, and documentation accessibility.

I’ll focus on manufacturer-provided or manufacturer-suggested IDEs and compilers (and these will be what I use to benchmark the MCU). There are more than a dozen compilers / IDEs available for many of these architectures, so I can’t reasonably review all of them. Feel free to express your contempt of my methodology in the comments section. Programmers / debuggers / emulators / dev boards: What dev boards and debuggers are available for the ecosystem?

How clunky is the debugging experience? Every company has a slightly different philosophy for development boards and debuggers, so this will be interesting to compare.

Performance

I’ve established three different code samples I’ll be using to benchmark the parts in this microcontroller review; I’ll be measuring quantitative parameters like benchmark speed, clock-cycle efficiency, power efficiency, and code-size efficiency.

While(1) Blink

For this test, I’ll toggle a pin in a while() loop. I’ll use the fastest C code possible, while also reporting if the code generator tool or peripheral libraries were able to produce efficient code.
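As a rough illustration of what the loop body looks like, here is a minimal host-runnable sketch. It is not the benchmark code itself: `fake_port` is a hypothetical stand-in for a vendor-specific memory-mapped GPIO output register, `LED_PIN_MASK` assumes the pin sits on bit 3, and the iteration count exists only so the loop terminates on a PC. On a real target the toggle would sit inside `while (1)`.

```c
#include <stdint.h>

/* Hypothetical stand-in for a memory-mapped GPIO output register.
   On real hardware this would be a vendor-defined register. */
volatile uint8_t fake_port;

#define LED_PIN_MASK (1u << 3)  /* assumption: the test pin is bit 3 */

/* One pass of the loop body: a read-modify-write toggle using XOR. */
void toggle_pin(void)
{
    fake_port ^= LED_PIN_MASK;
}

/* On target this would be while (1) { toggle_pin(); }.
   The bounded count is only here so the sketch ends on a host. */
void blink(unsigned iterations)
{
    while (iterations--) {
        toggle_pin();
    }
}
```

Parts with a dedicated toggle register would replace the XOR with a single write, which is exactly the kind of difference this test is meant to expose.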

I’ll use bitwise complement or GPIO-specific “toggle” registers if the platform supports it; otherwise, I’ll resort to a read-modify-write operation. I’ll report on which instructions were executed, and the number of cycles they took.

What this tests: This gives some good intuition for how the platform works. Because it is so low-level, we’ll be able to easily look at the assembly code and individual instruction timing. Since many of these parts operate above flash read speed, this will allow us to see what’s going on with the flash read accelerator (if one exists), and it serves as a general diagnostic / debugging tool for getting the platform up and running at the proper speed. This routine will obviously also test bit manipulation performance (though this is rarely important in general-purpose projects).

64-Sample Biquad Filter

This is an example of a real-world application where you often need good, real-time performance, so I thought it would be a perfect test to evaluate the raw processing power of each microcontroller.

For this test, I’ll process 16-bit signed integer data through a 400 Hz second-order high-pass filter (Transposed Direct Form II implementation, for those playing along at home), assuming a sample rate of 8 kHz. We won’t actually sample the data from an ADC; instead, we’ll process 64-element arrays of dummy input data, and record the time it takes to complete this process (by wiggling a GPIO pin that I monitor externally). In addition to the samples-per-second measure of raw processing power, I’ll also measure power consumption, which will give us a “nanojoule-per-sample” measure; this will help you figure out how efficient a processor is. Instead of the measurement units I’ve traditionally used for this, I ended up using a different tool: I ripped the target processor off a board, turning it into a $30 time-domain logarithmic power meter. If you’re interested in more information, check out my review, which has the details of these excellent tools.

What this tests: Memory and 16-bit math performance per microamp, essentially. The 8-bit MCUs in our round-up are going to struggle with this pretty hardcore, so it’ll be interesting to see just how much better the 16- and 32-bit MCUs do.
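For readers who have not met the Transposed Direct Form II structure, here is a minimal fixed-point sketch of one biquad section. Everything specific in it is an assumption rather than the benchmark code: the coefficients are a second-order Butterworth high-pass (400 Hz at 8 kHz) that I rounded to a Q14 format, and the state uses 64-bit accumulators purely to keep the host-side sketch free of overflow. A real 8- or 16-bit target would likely use narrower types with tighter scaling.

```c
#include <stdint.h>

#define Q 14  /* assumed fixed-point format: coefficients scaled by 2^14 */

/* Assumed coefficients: 2nd-order Butterworth high-pass, 400 Hz cutoff,
   8 kHz sample rate, rounded to Q14.
   Convention: y = b0*x + b1*x1 + b2*x2 - a1*y1 - a2*y2 */
static const int32_t B0 = 13117, B1 = -26234, B2 = 13117;
static const int32_t A1 = -25576, A2 = 10508;

/* Two state variables, as Transposed Direct Form II requires. */
typedef struct { int64_t s1, s2; } biquad_state;

/* Process one sample and update the two states. */
int16_t biquad_step(biquad_state *st, int16_t x)
{
    int64_t y = (B0 * (int64_t)x + st->s1) / (1 << Q);

    /* Saturate to the 16-bit output range. */
    if (y > INT16_MAX) y = INT16_MAX;
    if (y < INT16_MIN) y = INT16_MIN;

    st->s1 = B1 * (int64_t)x - A1 * y + st->s2;
    st->s2 = B2 * (int64_t)x - A2 * y;
    return (int16_t)y;
}

/* Filter a whole block, e.g. a 64-element array of dummy data. */
void biquad_block(biquad_state *st, const int16_t *in, int16_t *out, int n)
{
    for (int i = 0; i < n; i++) {
        out[i] = biquad_step(st, in[i]);
    }
}
```

Feeding this a constant (DC) input is a quick sanity check: a high-pass filter should let the initial step through and then settle back toward zero.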

Like it or hate it, this will also evaluate a compiler’s optimization abilities since different compilers implement math routines quite differently.

DMX-512 RGB light

DMX-512 is a commonly-used lighting protocol for stage, club, and commercial lighting systems. Electrically, it uses RS-485; the protocol uses a long BREAK message at the beginning, followed by a “0” start code and then up to 512 bytes of channel data, transmitted at 250 kbaud. In this test, I’ll implement a DMX-512 receiver that directly drives a common-anode RGB LED.

I will do this with whatever peripheral library or code-generator tool the manufacturer provides (if any at all). While you should really look for a precisely-timed break message, since this is only for prototyping, I’ll detect the start-of-frame by looking for an RX framing error (or a “break” signal, as some UARTs support LIN). I’ll minimize power consumption by lowering the frequency of the CPU as much as possible, using interrupt-based UART receiver routines, and halting or sleeping the CPU. I’ll report the average power consumption (with the LED removed from the circuit, of course). To get a rough idea of the completeness and quality of the code-generator tools or peripheral libraries, I’ll report the total number of statements I had to write, as well as the flash usage. What this tests: This is a sort of holistic test that lets me get into the ecosystem and play around with the platform.
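The receive logic described above can be sketched as a small state machine. This is a hedged, hardware-independent illustration, not any vendor's API: `dmx_on_break()` and `dmx_on_byte()` are hypothetical hooks you would call from a UART interrupt handler, `DMX_BASE_CHANNEL` assumes the LED listens on channels 1 through 3, and the frame is assumed to carry the standard start code followed by up to 512 channel slots.

```c
#include <stdint.h>

#define DMX_START_CODE   0x00
#define DMX_BASE_CHANNEL 1    /* assumption: R, G, B on channels 1..3 */

typedef enum { DMX_IDLE, DMX_EXPECT_START, DMX_DATA } dmx_state;

dmx_state state = DMX_IDLE;
uint16_t slot;                /* channel number of the next data byte */
uint8_t rgb[3];               /* most recent red, green, blue levels */

/* Hypothetical hook: call when the UART flags a framing error (break). */
void dmx_on_break(void)
{
    state = DMX_EXPECT_START;
}

/* Hypothetical hook: call for every correctly framed received byte. */
void dmx_on_byte(uint8_t b)
{
    switch (state) {
    case DMX_EXPECT_START:
        if (b == DMX_START_CODE) {
            state = DMX_DATA;
            slot = 1;
        } else {
            state = DMX_IDLE;   /* not a dimmer frame; wait for next break */
        }
        break;
    case DMX_DATA:
        if (slot >= DMX_BASE_CHANNEL && slot < DMX_BASE_CHANNEL + 3) {
            rgb[slot - DMX_BASE_CHANNEL] = b;
        }
        if (++slot > 512) {
            state = DMX_IDLE;
        }
        break;
    default:
        break;                  /* idle: ignore bytes until a break */
    }
}

/* Common-anode LED: the pin sinks current, so the PWM duty is inverted. */
uint8_t pwm_duty_common_anode(uint8_t level)
{
    return (uint8_t)(255 - level);
}
```

Between interrupts the CPU has nothing to do, which is why this workload suits the halt-or-sleep approach.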

This stuff is the bread and butter of embedded programming: interrupt-based UART reception with a little bit of flair (framing error detection), multi-channel PWM configuration, and nearly-always-halted state-machine-style CPU programming. Once you have your hardware set up and you know what you’re doing (say, after you’ve implemented this on a dozen MCUs before), with a good code-gen tool or peripheral library, you should be able to program this with just a few lines of code in an hour or less, hopefully without having to hit the datasheet much at all.

Test notes: I’m generating DMX messages from PC software through an FTDI USB-to-serial converter. As my FTDI cable is 5V, I put a 1k resistor with a 3.3V zener diode to ground, which clamps the signal to 3.3V. The zener clamp isn’t there to protect the MCU (all of the chips tested have clamping diodes to protect from over-voltage) but rather so that the MCU doesn’t inadvertently draw power from the Rx pin, which would ruin my current measurement results.

Evaluation Code

All code I used to evaluate these parts in this microcontroller selection guide is available in my public repository.

Check it out, fork it, submit patches, and keep me honest: if your results differ from mine, let’s get this post updated.

Specs Comparison

Core

Microcontrollers continue to divide into two camps: those with vendor-specific core architectures, and those that use a third-party core design.

Out of the 21 microcontrollers reviewed here, eight of them use a 32-bit ARM core, which is becoming ubiquitous in the industry — even at this price point. Three of the microcontrollers use an 8-bit 8051-compatible ISA.

The remaining ten use the vendor’s proprietary core design: six are 8-bit parts, three are 16-bit parts, and the PIC32 is the sole 32-bit part that doesn’t use an ARM core.

AVR

The AVR core is a famous RISC design known for its clock-cycle efficiency, especially at the time it was introduced in 1997. I reviewed two microcontrollers with an AVR core: a tinyAVR and a megaAVR. The specific AVR instruction set and timing for both parts I reviewed is known as “AVRe”; this instruction set includes a two-cycle multiply and many single-cycle operations. Note that older tinyAVR parts are essentially completely different MCUs with a less-capable AVR core that has no multiplier.

The AVR core has a 16-bit instruction fetch width; most instructions are 16 bits wide; some are 32. Still, this is a RISC architecture, so the instruction set is anything but orthogonal: while there are 32 registers you can operate with, there are very few instructions for working directly with RAM, and of those 32 registers, I’d say that only 16 of them are true “general purpose” registers, as R0-R15 can’t be used with all register operations (load-immediate probably being the most important). All things said, though, AVR offered a huge performance improvement over the 12-cycle or 6-cycle 8051 processors when AVR was first introduced, and the AVR is always faster than even modern 8051 derivatives when it comes to working with large arrays of data that must be stored in extended (16-bit) RAM on the 8051. It was also designed with C compilers in mind: with 32 registers available at all times, compilers can efficiently juggle many operands concurrently; the 8051, by comparison, has four banks of eight registers that are only easily switched between within interrupt contexts (which is actually quite useful). Interrupts, however, are one of the weak points of the AVR core: there’s only one interrupt priority, and depending on the ISR, many registers will have to be pushed to the stack and restored upon exit.

In my testing, this often added 10 PUSH instructions or more — each taking 2 cycles. Another issue with AVR is the generally slow clock speed — even the high-end XMEGA AVR parts can only run at up to 32 MHz, with both the parts reviewed here topping out at 20 MHz.

Compare that to modern 8051 parts, many varieties of which run at 48 MHz or higher (like the 72 MHz Laser Bee I reviewed). Even a 50%-better clock cycle efficiency doesn’t help much when the competition runs almost four times faster than the AVR.

Microchip PIC16

There’s something fundamentally goofy about almost all aspects of the PIC16 that makes it seem, at first glance, completely bizarre that it is as popular as it is. PIC16 uses an odd-ball 14-bit-wide program memory, yet it’s an 8-bit machine.

This dramatically simplifies the core architecture: a 14-bit word can hold just enough data to specify every CPU instruction, with enough free space left in the word to address up to 128 registers or 2K of program memory (for the two jump/call instructions). Microchip calls the PIC16 a RISC machine since every PIC instruction (there are just 49 of them) is precisely one word long. (There’s considerable debate as to what the precise definition of a “RISC architecture” is, but while the PIC16 has a single-word instruction length for all instructions, the PIC16 varies greatly from most RISC parts in that it is an accumulator-based machine, and has no working registers. I’ll leave it up to you to decide.) The PIC16 is often described as a 4T architecture, taking 4 clock cycles to execute a single machine instruction. This isn’t entirely true, as the PIC16 takes an additional 4 cycles to fetch that instruction. Consequently, it’s actually an 8T machine, though it implements a pipeline scheme that allows each instruction to execute in 4 cycles (except jumps, which take 8).
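To make the 4T timing concrete, here is a small sketch that counts oscillator clocks for a code sequence and converts them to time. The helper names are my own, and the example workload (one pin-toggle instruction plus one jump back to the top of the loop, on a part clocked at 32 MHz) is an assumption chosen for illustration, not a measured result.

```c
#include <stdint.h>

#define CLOCKS_PER_INSTRUCTION 4u  /* pipelined 4T instruction cycle */
#define CLOCKS_PER_JUMP        8u  /* a jump costs a second instruction cycle */

/* Total oscillator clocks for a sequence of plain instructions and jumps. */
uint32_t pic16_clocks(uint32_t plain_instructions, uint32_t jumps)
{
    return plain_instructions * CLOCKS_PER_INSTRUCTION
         + jumps * CLOCKS_PER_JUMP;
}

/* Convert a clock count to nanoseconds at a given oscillator frequency. */
uint32_t clocks_to_ns(uint32_t clocks, uint32_t fosc_hz)
{
    return (uint32_t)(((uint64_t)clocks * 1000000000u) / fosc_hz);
}
```

Under those assumptions, `pic16_clocks(1, 1)` gives 12 clocks, and `clocks_to_ns(12, 32000000)` gives 375 ns per loop iteration.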

Since real MCUs have more than 128 bytes of registers and 2K of program memory, this PIC has a bank selection register (BSR), which is written to whenever you need to swap banks (which happens a lot). The PIC16 is a single-register machine, and that register is named W.

Everything you do will essentially be moving something into W, doing something with it, and then moving it back to somewhere. Consequently, programming it in assembly is easy, and downright fun. Because this part can store 8192 14-bit program words, Microchip will tell you this part has 14 KB of flash (close to 16 KB, right?), but users will tell you that it has 8K of program memory (8192 words of memory), since storing an 8192-element byte array will occupy all 14 KB of its flash memory. Keep this in mind when comparing memory. I have a longer write-up of the PIC16 on its review page.
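The flash arithmetic is easy to check. The sketch below uses helper names of my own; it computes the raw byte count for a given number of program words and the number of locations an address field of a given width can reach. The 7-bit and 11-bit field widths in the usage note are what the 128-register and 2K figures quoted earlier imply.

```c
#include <stdint.h>

/* Raw flash size in bytes for a given number of program words. */
uint32_t flash_bytes(uint32_t words, uint32_t bits_per_word)
{
    return (words * bits_per_word) / 8u;
}

/* Number of distinct locations an address field of this width can reach. */
uint32_t addressable(uint32_t address_bits)
{
    return 1u << address_bits;
}
```

For this part, `flash_bytes(8192, 14)` is 14336 bytes, which is exactly 14 KB, yet a byte table still consumes one whole 14-bit word per element. Likewise, `addressable(7)` is 128 and `addressable(11)` is 2048, which is why banking and paging show up as soon as a part grows beyond those limits.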

Microchip PIC24

While the PIC10, 12, 16, and 18 are all 8-bit cores with 12-16 bit program memory, the PIC24 moves up to 16-bit data operated through 24-bit instructions (are you starting to catch onto the numbering system?). While all the PICs before it were 4T machines, the PIC24 is 2T; that is, two clock cycles per instruction cycle. The PIC24 has new indirect addressing modes that allow incrementing/decrementing and register-offset addressing, has a few more instructions, and has three hardware breakpoints instead of two; but otherwise, the core is very much in the spirit of the earlier PICs. The PIC24 has excellent power consumption figures, but many of the parts lack the clocking and oscillator options found on competing parts (and apples-to-apples, the MSP430 is lower-power).

The dsPIC versions of these parts, which add DSP-friendly instructions, are popular for motor drivers, but it’s not clear that the PIC24 has more widely been the runaway success Microchip had hoped.

Microchip PIC32

While everyone was migrating their 8-bit proprietary cores to Arm, Microchip was gleefully popping out PIC parts. But in 2007, they finally decided to add a new microcontroller, the PIC32, which uses a third-party, industry-standard 32-bit core.

Instead of following everyone to the Arm ecosystem, they took a different turn: PIC32 parts use the MIPS architecture — specifically the M4K core. MIPS built this core for single-chip MCU applications. M4K has 32 registers, a 5-stage pipeline, vectored interrupts and exceptions, bit-manipulation, and 16-bit instruction encoding support.

It is not the same as an Arm processor, but at the C application level, they are similar enough that any Arm developer should have no problems (other than the usual manufacturer-to-manufacturer peripheral differences). You can program and debug the PIC32 using the same PGC/PGD/MCLR set-up you use on all other PIC parts — but there’s also support for JTAG, though the most popular JTAG debugger — the Segger J-Link — has limited support for the PIC32MX parts, and no support for the PIC32MM.

Arm Cortex-M0

The Arm Cortex-M0 is a 32-bit RISC architecture that serves as the entry-level Arm architecture available to silicon vendors for microcontroller applications. (Formerly “ARM,” but as of August 1, 2017, “Arm” is the capitalization style.) Arm cores are designed by Arm Holdings and licensed to semiconductor manufacturers for integration into their products. Arm started out as a personal computer microprocessor when Advanced RISC Machines was formed as a joint venture between Acorn, Apple, and VLSI Technology to manufacture 32-bit processors for the Acorn computer. While Arm cores have grown in popularity as microprocessors for battery-powered systems (they are almost certainly powering your smartphone), Arm moved into the microcontroller sphere as well. The ARM7TDMI-S was probably the first Arm core that was used in microcontrollers, i.e., processors with completely self-contained RAM, flash, and peripherals. The Atmel AT91 and ST STR7 were probably the first microcontroller parts designed with an Arm core. It’s important to understand the history of Arm because it explains a serious feature of Arm microcontrollers that differs substantially from the 8051 (the other multi-vendor architecture that dominates the field): unlike the 8051, Arm is just a core, not a complete microcontroller. The ARM7TDMI-S didn’t come with any GPIO designs, or provisions for UARTs or ADCs or timers; it was designed as a microprocessor.

Thus, as vendors started stuffing this core into their extremely high-end MCUs, they had to add in their vendor-specific peripherals to the AHB (the AMBA High-performance Bus, where AMBA is the Advanced Microcontroller Bus Architecture; these multi-level acronyms are getting tedious). Consequently, Freescale used a lot of HC08 and ColdFire peripherals, while Atmel designed new peripherals from scratch. ST borrowed a bit from the ST7 (the precursor to the STM8) but used new designs for timers and communications peripherals. Since many microcontroller projects spend 90% or more of the code base manipulating peripherals, this is a serious consideration when switching from one Arm MCU vendor to another: there’s absolutely zero peripheral compatibility between vendors, and even within a single vendor, their Arm parts can have wildly different peripherals.

Unlike other Arm parts, the M0 series only supports a subset of the 16-bit Thumb instruction set, which allows it to be about 1/3 the size of a Cortex-M3 core. Still, there’s a full 32-bit ALU, with a 32-bit hardware multiplier supporting a 32-bit result.

Arm provides the option of either a single-cycle multiply, or a 32-cycle multiply instruction, but in my browsing, it seems as though most vendors use the single-cycle multiply option. In addition to the normal CPU registers, Arm cores have 13 general-purpose working registers. The core has a nested vector interrupt controller, with up to 32 interrupt vectors and 4 interrupt priorities: plenty when compared to the 8-bit competition, but a far cry from the 240 interrupts at 256 interrupt priorities that the larger Arm parts support. The core also has full support for runtime exceptions, which isn’t a feature found on 8-bit architectures. The M0+ is an improved version of the M0 that supports faster two-cycle branches (due to the pipeline going from three-stage to two-stage), and lower power consumption. There are a slew of silicon options that vendors can choose from: single-cycle GPIO, support for a simple instruction trace buffer called Micro Trace Buffer (MTB), vector table relocation, and a rudimentary memory protection unit (MPU). One of the biggest problems with Arm microcontrollers is their low code density for anything other than 16- and 32-bit math, even for those that use the 16-bit Thumb instruction set. This means normal microcontroller-type routines (shoving bytes out a communication port, wiggling bits around, performing software ADC conversions, and updating timers) can take a lot of code space on these parts.

Exacerbating this problem are the peripherals, which tend to be more complex (I mean “flexible”) than those on 8-bit parts, often necessitating run-time peripheral libraries and tons of register manipulation. Another problem with Arm processors is the severe 12-cycle interrupt latency. When coupled with the large number of registers that are saved and restored in the prologue and epilogue of the ISR handlers, these cycles start to add up. ISR latency is one area where a 16 MHz 8-bit part can easily beat a 72 MHz 32-bit Arm microcontroller.

8051

The 8051 was originally an Intel microcontroller introduced in 1980 as one of the first widely-deployed 8-bit parts. The 8-bit modified Harvard core has a fully-orthogonal variable-length CISC instruction set, hardware multiplier and hardware divider, bit-addressable RAM and specific bit-manipulation instructions, four switchable banks of eight registers each, two-priority interrupt controller with automatic register bank-switching, 64 KB of both program and extended RAM addressability, with 128 bytes of “scratch pad” RAM accessible with fast instructions. The 8051 was actually a specific part, not a family, but its name is now synonymous with the core architecture, peripherals, and even package pin-out. (The 8051 is a member of a family officially called the “MCS-51,” along with the 8031, 8032, and 8052, plus all the subsequent versions that were introduced later.)

The original had 4K of ROM, 128 bytes of RAM, four full 8-bit GPIO ports (32 I/O total), a UART, two or three timers, and a two-priority interrupt system. (Expensive windowed ceramic packages were available for developers, but production units were mask-ROM or OTP; in the case of the 8031, only external ROM was supported.) The 8051 has a fully orthogonal CISC instruction set, which means you can do nearly any operation with immediate, direct, or indirect operands, and you can do these operations in RAM, registers, or the A accumulator. Many vendors built direct, drop-in compatible clones of the 8051, and by the time Intel discontinued its MCS-51 products in 2007, barely anyone noticed: these third-party parts evolved from mimicking Intel functionality to outright beating them with tons of additional timers, peripherals, and special-purpose functionality. Because of its small core and fast interrupt architecture, the 8051 architecture is extremely popular for managing peripherals used in real-time high-bandwidth systems, such as USB web cameras and audio DSPs, and is commonly deployed as a house-keeping processor in FPGAs used in audio/video processing and DSP work.

Old-timers associate the 8051 with “old and slow” because the original was a 12T microcontroller — each machine cycle took 12 clock cycles to complete. Since there was no pipelining, every instruction byte took a machine cycle to fetch, plus one or more additional machine cycles to execute — altogether, it could take more than 50 clock cycles to execute a given instruction.

No need to worry about that anymore, though: all the modern 8051-style MCUs available are 1T processors, and many of them have a pipelined core, meaning many instructions take the same number of clock cycles to execute as the instruction’s length. Consequently, in many 8051 implementations, operations in A (the accumulator) are the fastest, followed by the register bank, and then RAM. I ended up with three different 8051-compatible microcontrollers in the lineup. From a pure core design standpoint, the STC8 is probably the most interesting: while I can’t find documentation to confirm this, it appears that STCmicro uses a 24-bit (or more) parallel instruction-fetch size.

This means that a huge number (more than 80%) of instructions can be executed in a single cycle, which is a substantial step up from all other 8051s on the market these days. However, the EFM8 Laser Bee, from Silicon Labs’ C8051 lineage, can hit much higher clock speeds, topping out at 72 MHz, which makes it the fastest part I reviewed, by core speed. I’ll be looking more at this in the performance section of the review.

STM8

The STM8 core has six CPU registers: a single accumulator, two index registers, a 24-bit program counter, a 16-bit stack pointer, and a condition register.

The STM8 has a Harvard architecture, but uses a unified address space. There’s a 32-bit-wide program memory bus which can fetch most instructions in a single cycle, and pipelined fetch/decode/execute operations permit many instructions to execute in a single cycle. The claim to fame of the core is its comprehensive list of 20 addressing modes, including indexed indirect addressing and stack-pointer-relative modes. There are three “reaches” for addressing (short, or one-byte; long, or two-byte; and extended, or three-byte), trading off memory area with performance. This is the only architecture in this round-up that has this level of granularity: all the other chips are either RISC-style processors that have lots of general-purpose registers they do their work in, or 8051-style CISC parts that manipulate RAM directly but pay a severe penalty when hitting 16-bit address space. The STM8 manages these trade-offs in an efficient manner.

The chart above illustrates the differences in core clock speed among each MCU.

As will be seen in the evaluation section, core clock speed is not a good predictor of performance when comparing between different MCU families (especially between 8-, 16-, and 32-bit parts). However, most MCUs limit the maximum peripheral clock rate to that of the CPU, which may be a driving factor if your application requires fast peripheral clocks (say, for fast GPIO bit-banging or for high-speed capture/compare timer operations). The Infineon XMC1100 is a neat exception to this rule — its peripheral clock can run at up to 64 MHz. There are other important asterisks to this data: the Atmel tinyAVR and megaAVR parts have severely limited operating ranges when running below 5V, which will affect most modern designs.

The tinyAVR can only run at 10 MHz below 3.6V, and at 5 MHz below 2.2V. The megaAVR has the same speed grades, but even worse, has nothing faster than an 8 MHz internal oscillator. When talking about sub-$1 MCUs, adding a crystal or even low-cost ceramic resonator adds a sizable portion of the cost of the MCU to the BOM. The Silicon Labs EFM8 Laser Bee, with its 72 MHz core clock speed, beats out even the ARM microcontrollers in this round-up. The Sanyo LC87 brings in a 12 MHz reading — but bear in mind this is a 3T architecture, which limits the actual instruction clock speed to 4 MHz. The Holtek HT66 and Microchip PIC16 are both 4T architectures, but the PIC16 has a relatively snappy 32 MHz core speed (thanks to its on-board PLL), which allows it to compete better with 8 MHz parts. Here, we consider flash capacity in terms of bytes — but be aware that flash usage varies considerably by core.

The PIC16 may have 14 KB of flash, but being a 14-bit-wide core, it will feel more like it has 8 KB of flash when it comes time to actually storing and manipulating data, and counting instructions. The Holtek HT66 is similar to the PIC16, but with a 16-bit-wide fetch.

The same goes for the PIC24, which has a 24-bit-wide flash data path. While other cores may have a wider-than-a-byte fetch size, they still allow instructions and data to be packed into flash efficiently. The STC8’s insane equipment list starts to shine: with 64 KB of flash, this part should be able to do essentially anything you throw at its space-efficient 8051 core. On the other side of the spectrum, the Arm processors were especially stingy with flash capacity, which is important to consider for applications that rely on a lot of peripheral runtime libraries: as we’ll see in the performance evaluation, many of these parts had little room left over after the test code was programmed onto them. The Microchip PIC24’s 4 KB flash capacity makes the part essentially unusable for general-purpose applications that would usually target a 16-bit controller, as it can only hold 1408 instructions in flash (due to its 24-bit-wide word fetches). Infineon’s memory configuration is actually “backwards”: it has 8 KB of flash, and 16 KB of RAM. Performance-critical code on this part should be moved to RAM for fast execution.

While you’re at it, unless you’ve got some large data capturing/analyzing procedures, you could just, too. The KE04 (and probably the PIC24) both have too little RAM for most projects that would target these architectures. I’m actually less worried about the Holtek HT66; its efficient peripheral library essentially uses no RAM, and 256 bytes of user data is plenty of space for an ultra-low-power MCU that’s designed for only the most basic duties. Each MCU’s review page discusses its complement of timers, but I wanted to come up with a score to help quickly compare timer peripherals across MCUs; I call it TimerMark.

The chart above illustrates the total number of communications resources each microcontroller has. Most vendors use a separate, dedicated module for UART, SPI, and I²C communication.

Went a step further and doubled up everything: two UARTs, two SPI, and two I²C. Except under heavily-loaded buses, or under extremely strange designs, there’s rarely a need for multiple SPI or I²C buses, so this decision struck me as a bit odd. ‘s decision to incorporate four separate UARTs in addition to an SPI and an I²C module seems to be more useful, since UARTs are almost always dedicated to a particular peripheral. Doubling-up on UARTs was a popular decision overall: the other 8051s (the and the) bring a second UART, as do the and, along with the. I also like the flexibility that Cypress, Infineon, Atmel, and Renesas have: they use arrayed “serial units,” each of which can morph into a UART, SPI, or I²C peripheral. Cypress and Infineon have two; Atmel and Renesas have three (plus a fourth I²C-slave-only interface for Renesas).

The odd ones out were the, the, and the: none of these MCUs have an I²C peripheral, a curious omission in an era of SMBus-compliant digital sensors everywhere. And unlike the 8051’s quasi-bidirectional GPIO configuration, none of these MCUs are well-suited to bit-banging I²C (though it’s obviously possible by switching data-direction). All the reviewed parts had an ADC except the PSoC 4000S, LPC811, and the Microchip PIC24 (though these peripherals are available on more expensive members of this family). Most of the 8- and 16-bit parts had 10-bit ADCs, while the 32-bit parts had 12-bit resolution. Some 8-bit vendors offered 12-bit (Holtek HT66, STCmicro STC8), but the stand-out is the Silicon Labs EFM8, which has a 14-bit ADC (the highest resolution in our round-up) and also has 20 channels, tying the tinyAVR and Sanyo LC87. The PIC16 brought in the highest channel count: it has 24 total inputs and can sample at 470 ksps.

For speed, the EFM8 brings 900 ksps to the table, beaten only by ST and Infineon, whose ADCs can clock at up to 1 Msps. Other vendors turned in results hovering in the 500-800 ksps range. The Atmel ADCs were the slowest, with the megaAVR’s sluggish 15 ksps (no “0” missing) far below everyone else. Even their 32-bit ARM only turned in a 350 ksps reading, the slowest ARM part tested.

DAC

In this price range, it is unusual to find parts with DACs, but in this microcontroller review, five parts had this functionality. The has a two-channel 12-bit DAC, the highest resolution in my round-up.

The has a single-channel 10-bit DAC, while the part has three channels with 8-bit resolution. The has a basic, 5-bit DAC (oddly, with two separate outputs — even though they’re generated from the same DAC). The also had a basic 6-bit DAC that is pumped directly into a comparator (where these low-speed, low-resolution DACs are usually used).

Parametric Reach

One of the major themes of this microcontroller selection guide is to show how easy it can be to get going with different parts, and comfortably jump around among ecosystems, picking the best part for the job. But for casual hobbyists who may live far away from major distributors, and professionals who have to meet tight timelines, sometimes there’s no time to play around with new architectures. If you want to commit to a single architecture, it’s important to know which one gives you the most headroom to move up. I created a fictitious “times better” score by comparing the part tested with the best part available in the same ecosystem; this usually means fairly comparable peripheral programming, along with identical development tools. I multiplied the core speed, package size, flash, and RAM capacities together, ratioed the two parts, and then took the quartic root. Essentially, if every parameter is doubled, the part is considered “2.0x” as powerful. Surprisingly, the PIC32 came out on top: there are four PIC32 families. The PIC32MX was the first; it’s the mainstream core that runs up to 120 MHz, with up to 512 KB of flash and 128 KB of RAM.

But it’s the newer PIC32MZ that reaches even higher: up to 252 MHz, with 2 MB of flash, and — with the DA version of the part — includes 32 MB of onboard DDR2 memory. With the MZ-DA, you can build complex graphical apps without needing an application processor running Linux (and the PCB / BSP complexity that arrives with that). It’s essentially the PIC32 version of the Arm Cortex-M7.
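Going back to the “times better” score defined at the start of this section, here is a minimal sketch of the calculation: multiply the four parameters for each part, take the ratio, then take the fourth root. The dictionary keys, the use of pin count for "package size", and the sample numbers are all assumptions made for illustration; they are not the figures behind the rankings here.

```python
from math import prod

PARAMS = ("mhz", "pins", "flash_kb", "ram_kb")


def reach_score(tested: dict, best: dict) -> float:
    """Fourth root of the ratio of parameter products (best part vs. tested part)."""
    ratio = prod(best[k] for k in PARAMS) / prod(tested[k] for k in PARAMS)
    return ratio ** 0.25


# Hypothetical parts: every parameter of `best` is exactly double that of `tested`.
tested = {"mhz": 48, "pins": 20, "flash_kb": 16, "ram_kb": 4}
best = {key: 2 * value for key, value in tested.items()}

print(f"{reach_score(tested, best):.1f}x")
```

Taking the fourth root is what keeps the score linear in "overall doubling": without it, a part that doubles all four parameters would score 16x.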

Next up, the line. The STM32F0 has a famous big brother — the STM32F4 — that’s one of the most capable Arm Cortex parts ever built. Several versions run up to 180 MHz, with 2 MB of flash and up to 364 KB of RAM (in the case of the STM32F469). But the brand-new STM32F7 — part of the new Cortex-M7 line of parts — goes even further, with 216 MHz maximum operating frequency, 2 MB of flash, and 512 KB of RAM.

The — one of the lower-performing parts in my round-up — has several big sisters, including the LPC546xx series, a giant Cortex-M4 with 220 MHz max frequency, 512 KB of flash, 200 KB of RAM, in up to 208-pin packages. The in this review has very little headroom — these devices top out at 16 KB of flash, 2 KB of RAM, 20 MHz, and 24-pin QFN packages; however, 32 KB tinyAVR parts are soon to be released. The has a bit of reach in pinning and memory. The ATmega3290 keeps the 20 MHz clock speed but bumps up the pin-count to 100 pins.

There are many megaAVR parts with 64 KB of flash and 4 K of RAM, as this part has. Some megaAVR parts have as much as 16 KB of RAM or 256 KB of flash. Oddly, Atmel can’t seem to combine these specs — there is no 100-pin, 256 KB flash, 16 KB RAM megaAVR that is in current production. The extends up to the SAM D21, which maintains its 48 MHz clock speed, but increases flash up to 256 KB, with up to 32 K of RAM, and sizable 64-pin package options.

There’s a lot of headroom left in the SAM Arm microcontroller ecosystem, but if you leave the D1x/D2x line of parts, you’ll lose familiarity with some of the peripherals (especially the communications interfaces). Having said that, the Arm Cortex-M7-based ATSAMS70 will get you up to 300 MHz of performance, 2 MB of flash, and 384 KB of RAM, in up to 144-pin packages.

The older A revision parts are deeply discounted, including a 64-pin 1 MB flash part for $5.14 on DigiKey. With the, you’re at rock-bottom in the PSoC ecosystem, so the only direction is up: the PSoC 5 devices run at 80 MHz, with up to 256 KB of flash and 64 KB of RAM in 100-pin packages. These parts come with all the PSoC goodness that Cypress users love: reconfigurable digital logic, lots of analog features, and excellent capacitive-touch sensing.

The just-around-the-corner PSoC 6 promises to bring even more performance to the ecosystem, with the PSoC 63 running a 150 MHz Arm Cortex-M4F, 1 MB of flash, 288 KB of RAM, integrated BTLE connectivity, and 100+ pin packages. The Freescale and are both entry-level devices in the E and L families within the Kinetis system. The E family has good reach up to the 168 MHz KE1x, with up to 512 KB of flash, 64 KB of RAM, and up to 100-pin packaging. Unfortunately, this is a fairly different process than the KE04 reviewed here — there’s no Processor Expert support, and the communications peripherals are quite different than those in the lower-end part. It retains its 5V operating range, which segments it into the E family. The KL03 extends up to the KL28 — a 72 MHz Cortex-M0+ with 512 KB of flash, 128 KB of RAM, and up to 121-pin BGA packages available. Unlike the E series, the KL series has much more uniform peripherals across its range of devices.

The has no real latitude above the HT66F0185, but as mentioned on the review page, there are tons of application-specific products Holtek makes that use this core. The Infineon family extends up to the XMC1400, with 200 KB of flash, 16 KB of RAM, a slightly-faster 48 MHz core clock, and a 64-pin package. Moving out of the XMC1000 ecosystem, the XMC4000 keeps the XMC1000 peripherals and swaps out the core for a Cortex-M4F, running up to 144 MHz, with 2 MB of flash, 352 KB of RAM, and up to 196-pin packaging options. The tops out in the 64-pin PIC16F19197 device, with 56 KB of flash (well, 32 K words, because PIC) and 4 KB of RAM. I’ll include PIC18 devices, though, as they’re targeted with the same compiler, programmed with the same debugger, and share peripherals and architectural decisions.

PIC18 devices can reach up to the PIC18F97J60, a 100-pin beast with 128 KB of flash (64 K words), and almost 4 KB of RAM. While most of these 8-bit parts have similar peripherals across the board, I must note the Ethernet MAC and PHY present in the PIC18F97J60. While many higher-end microcontrollers have an Ethernet MAC, this low-end PIC18 part is one of the only microcontrollers, at any price, to also integrate a PHY. (The only other mainstream MCU that has an integrated Ethernet PHY is the $14 Tiva-C TM4C129x, a giant 128-pin 120 MHz Arm Cortex-M4 from Texas Instruments. There are a few other, albeit odd, choices out there: Freescale’s legacy ColdFire microcontrollers include the MCF5223X, which has an integrated Ethernet PHY, and fabless designer ASIX manufactures the AX11015, a 100 MHz 8051 with an integrated Ethernet PHY.)

The PIC24FJ1024GA610 supports up to 1024 KB of flash, 32 KB of RAM, and a 32 MHz run speed, though there are dsPIC devices, like the dsPIC33EP512GM604, with up to 140 MHz operating frequency, 512 KB of flash, and 48 KB of RAM.

The can look up to the 40 MHz W79E658A, with 128 KB of flash, 100-pin packaging, and 1.25K of RAM. The has a few Cortex-M4 big sisters: the M505 has 2 MB of embedded SPI flash (sounds slow to me), and 128 KB of RAM, runs at 100 MHz, and comes in a 48-pin package. Nuvoton hasn’t released the M487 yet, but it promises a 192 MHz CPU with 512 KB of flash, 160 KB of RAM, and at least 80-pin package options (though Nuvoton hasn’t unveiled all details yet). Extends up to the R5F101SLAFB, with 128-pin package, 512 KB of flash, 32 KB of RAM, and a slightly-faster 32 MHz clock speed.

Sanyo’s LC87 extends up to 100-pin 256 KB parts with 12 KB of RAM and an 18 MHz clock, with the LC87F7NP6AUE. The is the top-of-the-line part in the EFM8 family; these top out at 64 KB of flash, 4.25 KB of RAM, and a relatively-small 32-pin package. Having said that, you can stay in the Silicon Labs 8051 family with the C8051F120, a 100 MHz 8051 with 128 KB of flash and 8.25 KB of RAM in a 100-pin package. It’s an older part, but it’s still supported in Simplicity Studio (though not in Simplicity Configurator). The I tested is the top-of-the-line part in their catalog. The extends up to the MSP430F6779, with 512 KB of flash, 32 KB of RAM, and 128-pin packaging.

Eclipse-based development environments, such as Code Composer Studio from Texas Instruments, provide a complete text editor, toolchain, and debugging system in one application. Many vendors also choose to extend Eclipse with vendor-specific features, such as the ULP Advisor that Texas Instruments bundles to help developers get active- and sleep-mode current down to the minimum.

Eclipse

Eclipse is a Java-based IDE originally developed at IBM to develop in Java. But since 2001, it has been an open-source project, now stewarded by the Eclipse Foundation. Eclipse CDT provides C/C++ tooling, and has taken off like wildfire in the embedded world, starting in the 32-bit ARM ecosystems but migrating down to 16- and 8-bit parts as well. In fact, almost all the major microcontrollers here are programmed in an IDE based on Eclipse. Other than DAVE, CooCox, and e² studio (which all only run on Windows), all of these toolchains have cross-platform support for Windows, macOS, and Linux. Recent versions of Eclipse, like the 4.6.3 Neon release that System Workbench for STM32 uses, provide good dark theme support, as well as the incredibly snappy pop-up function browser that shows you the full source code of any function you hover over. Main features of Eclipse CDT include multi-project workspaces, debugging with tons of introspection windows, support for different toolchains, plus a syntax-highlighting text editor with content assist text completion, macro definition browsing and highlighting, code snippets, and tons of refactoring capabilities.

The code editor in Eclipse is definitely a stand-out among IDEs tested, especially if you’re coming from other IDEs. Everything is completely customizable, very snappy, and full of features. The pop-up “Source Hover” is one of my favorite features: hover over a function, and the source of the function (including any docs) will pop up immediately. If you’re still not sure about something, move your mouse down into the pop-up window and it turns into a scrollable editor window, allowing you to see the entire contents of the function (and copy-and-paste from it).

One feature request: I would love to see Ctrl-Click working from within this pop-up, and it would also be amazing to see editing capability.

Vendor Customizations

Open-source plug-ins have made it trivial to set up an Eclipse-based workflow when working on many ARM processors; under the hood, some of these IDEs are basically just pre-packaged open-source components with a nice splash screen. This is definitely the case for Kinetis Design Studio, MCUXpresso, and System Workbench for STM32 (which didn’t even bother changing the Eclipse logo). There’s nothing wrong with that: it’s far less jarring to move between stock Eclipse IDEs, and if you’re short on hard drive space, you could probably install and configure plug-ins to essentially combine many of these IDEs together. On the other hand, some vendors had to go to great lengths to get an Eclipse-based environment working with their parts.

Silicon Labs had to write a debug interface from scratch that could communicate with their tools (and work with Keil C51 binaries) and custom property panes for managing the build system, along with packaging a patched WINE system that can run Keil C51 seamlessly on macOS and Linux (and from my testing, they pulled it off). CoIDE has a super-simple target configuration property pane, and Keil µVision-style buttons for building and rebuilding projects. In fact, one IDE, CoIDE, is so far removed from Eclipse that I hesitated to even mention it in this list. CooCox essentially stripped Eclipse down to its base, and built up CoIDE saving little more than the Eclipse shell. What results is an IDE that is extremely easy to use: great for students and hobbyists who may find the Eclipse project properties pane to be, well, a pain. This comes at the expense of flexibility, however: tons of the debugging options and windows are missing, and you can only open one project at a time.

Sometimes the IDE does things you may not want. For example, every folder you create in a project is automatically added to the list of project source files as well as include files. I imagine this will quickly cause filename conflicts in large projects (which CoIDE doesn’t seem built for). When building out the DMX-512 demo for the RL-78, e² studio’s Stack Analysis tool helped me see where my stack-heavy calls were.

Vendors like Freescale decided to stop heavily customizing Eclipse with their own proprietary debugging system (which they did in CodeWarrior), and switch to these open-source plug-ins. I have mixed feelings about this change, as CodeWarrior seemed much faster at starting and stopping debug sessions than the GDB-based system everyone uses these days. On performance overall, recent versions of Eclipse (Mars or better) seem to be much snappier than older releases, so if it felt slow and bloated last time you tried it, you may want to give it another shot. Oxygen (4.7.0) was just released in June, so the newest IDEs (System Workbench, MCUXpresso, and Code Composer Studio) are still on Neon (4.6). Other Eclipse-based IDEs are on older versions, with Kinetis Design Studio and DAVE being on the oldest release (Luna SR2, 4.4.2). Simplicity Studio has a beautiful and functional register view that allows you to interact with registers using names and drop-down lists; this saves a lot of time when tracking down problems, no datasheet required.

Debugging in Eclipse

Eclipse, across nearly all vendors, provided the best out-of-the-box experience of all the IDEs I tested. Projects support multiple debug configurations that allow you to use different debuggers and target configurations. Out of the box, you get a source code view with interactive breakpoints, a memory browser, a disassembly view, and a list of core CPU registers. Debugging in Eclipse is relatively unified across the platforms; the biggest differences are the supported debuggers and the custom debugging windows, especially the peripheral register view.

In my opinion, the peripheral register viewer is one of the most important debug windows — even more important than the disassembly view. My favorite register viewers are the ones used in TI’s Code Composer Studio and SiLabs’ Simplicity Studio. These have a contiguous list of all registers, organized in a tree view, with detailed descriptions of each register’s value, with combo-box selectors of all the values. Simplicity Studio edges out Code Composer Studio by naming all the constants. This is sometimes unnecessary (like: SYSCLK_DIV_12 (System clock divided by 12) ), but definitely keeps your eyes out of the datasheet for the part. Kinetis Design Studio uses — an open-source Eclipse plugin — which provides similar functionality.

This uses, a standardized set of tools for generating header files and descriptions of such. System Workbench for STM32 has a similar view, but doesn’t automatically fetch the register values.

This may not be a bad thing, as ARM microcontrollers tend to have a lot more register addresses than 8-bit parts, but it still feels a bit clunkier. MCUXpresso and Infineon DAVE have a somewhat-strange two-step process — you select the peripheral in one view, and it creates a memory rendering. Registers are broken out logically into one or more bits, but the drop-down lists don’t have named enumerations that describe what each bit-pattern does.

DAVE has exactly the same plugin as MCUXpresso, but it seems buggy: it doesn’t always work. Hopefully this gets fixed in a future version, because it severely limits the usability of the debug system. CoIDE and e² studio both have the worst register views; they simply display a list of the peripheral register whole values, without breaking them up logically or annotating them with text. e² studio has real-time watches of variables; in addition to displaying variable values as a list, they can be plotted over time, or displayed in gimmicky GUI widgets that look ugly.

Other features

Some vendors have gone above and beyond with useful (and not-so-useful) additional views and features. e² studio, despite the pesky peripheral register viewer, has a useful real-time view of variables that update while debugging. I suspect the IDE is periodically halting the MCU, reading the contents of RAM, and updating the display, but they may have a mechanism for real-time tracing in the RL-78 core.

This IDE can also show flash and RAM usage — though it’s not plotted as nicely as it is in DAVE, which shows a pie chart of all symbols. Specific to ARM parts, all Eclipse IDEs I tested support semihosting, which allows you to print characters to a console window during debugging. The characters are printed through the debugging interface, so there’s no need to configure a UART. MCUXpresso and CoIDE were the easiest Eclipse-based IDEs to configure with semihosting. MCUXpresso has nice project properties panes for selecting a C runtime library (it includes Redlib in addition to Newlib Nano) as well as linker settings.

Which of these #ifdefs are enabled? Your guess is as good as mine; Atmel Studio is Visual Studio without Microsoft’s excellent IntelliSense engine, making it worse than even Keil µVision in terms of text-editing productivity — and far inferior to the Eclipse- and NetBeans-based IDEs from competitors.

I added 6 publicly-visible global variables in this file among others in the project, and none of them appear in the auto-complete list.

Atmel Studio

This error started popping up recently in Atmel Studio; the only solution seems to be restarting my computer. It’s obviously from an old chunk of code, since it’s referring to the program as “AVR Studio.” While many vendors have transitioned to Eclipse-based IDEs, Atmel went with a Visual Studio Isolated Shell-based platform starting with AVR Studio 5. I do a ton of .NET and desktop-based C++ development, so I expected to feel right at home in Atmel Studio when I first launched it. Unfortunately, Microsoft calls this product “Visual Studio Isolated Shell” for a reason: it’s simply the shell of Visual Studio, without any of the meat. The excellent IntelliSense engine that Microsoft spent years perfecting has been replaced by a third-party “Visual Assist” plugin that struggles to identify global variables, evaluate pre-processor definitions, or perform refactoring of items defined outside of the current file.

The Toolchain editor is a near-clone of the Eclipse CDT one (no reason to reinvent the wheel), but it’s missing checkboxes and inputs for commonly-used compiler and linker options; one stunning omission is link-time optimization, which, even when manually specified as command parameters, doesn’t seem to work (odd, since Atmel is using a recent version of GCC). My biggest issue with Atmel Studio is how incredibly buggy and unstable it has been every time I’ve used it in the last two years. I’m not referring to a specific installation on a specific computer: rather, every single time I’ve installed the software, I’ve fought with AVR Dragon drivers, a bad DLL file in the installer, programmer firmware issues, or, most recently, the software popping up the “Waiting for an operation to complete” message that prevents me from debugging any Atmel product without restarting my computer. Look, I get it: embedded firmware development is a highly-specialized task, so maintaining software that works reliably for such a small user-base can be challenging. Yet, every other vendor’s tools I tested worked nearly flawlessly.

MPLAB X is a NetBeans-based IDE that is comparable to Eclipse in terms of OS support, editor capabilities, and debugging, but it lacks some of the advanced debug configurations and introspection features that make Eclipse so powerful.

MPLAB X

While many vendors were moving from their proprietary Windows-only IDEs to an open-source Eclipse-based workflow, Microchip went a different route when they moved MPLAB 8 to the NetBeans-based MPLAB X in 2012. On paper, NetBeans is a lot like Eclipse: it’s a Java-based IDE that was originally built to target Java development, but has since expanded to support C/C++ (along with web-centric languages: HTML, PHP, JavaScript, etc). Like Eclipse, NetBeans is open-source and cross-platform. Unlike Eclipse with its Workspaces, NetBeans doesn’t strongly enforce this paradigm, but it does offer “Project Groups,” which have similar functionality. Both have good text-completion capabilities and source introspection; both have macro expansion.

I’ve used both for years, so I feel comfortable making this subjective claim: NetBeans feels simpler; Eclipse feels more powerful. A lot of this is a result of the UX design choices — Eclipse loads the window with tons of buttons, drop-down menus, and docked panes full of features. The entire IDE’s scale is much more dense than NetBeans. Even though NetBeans has a lot of the same features, the UI is sparsely populated with the bare minimum of buttons you need to get your job done.

Even the menu bar is light on options. Instead, advanced, rarely-used features are buried away inside sub-sub menus, or — somewhat more commonly — with keyboard combinations.

As an example, I have no idea how to show the excellent Macro Expansion view in NetBeans, other than pressing Ctrl-Alt and clicking on a macro. Just to double-check, I went hunting for it in the menu bar, as well as digging through the context menus. I think students and hobbyists might be drawn toward the simplicity of NetBeans, but I prefer Eclipse’s density, as it encourages users to go exploring and discover new features.

One big omission with the NetBeans text editor is the pop-up Source Hover code explorer that Eclipse has. Hover over any function in Eclipse, and the entire source code for that function pops up in a window you can scroll through. NetBeans will display code docs for functions, but if you want to look at the content of them, you’ll have to Ctrl-Click your way into the definition.

Microchip Customizations

I have to applaud Microchip for heavily customizing NetBeans into MPLAB X, an IDE that really feels like it was built for embedded development. The Project Properties window is all Microchip: you can select which tool you want to use to program the device (or the integrated simulator), as well as compiler options, include paths, and tool configuration. Integrating the tool setup into individual project properties is useful for developers who switch between devices (and voltages!) a lot, but it may feel clunky to users who are always on the same device, using the same settings: every time they create a new project, they’ll have to go through the same tool configuration settings (debugger powers target, select correct voltage, blah blah blah). One goof immediately visible is the redundant “Run Project” and “Make and Program Device” buttons.

From what I can tell, these are identical (the manual says you can use either). However, from the bizarre Eclipse integrations I’ve seen, I’m used to dealing with UI oddities like this when dealing with embedded IDEs. Microchip integrates a lovely dashboard view (visible in the bottom of the main photo), which indicates the device target, the compiler, and the memory usage. The MPLAB X Options window allows you to add multiple instances of the Microchip XC compilers. As these compilers don’t support new devices introduced after their release, it is common for shops to have several versions of XC compilers floating around: old, paid-for versions, and new code-size-limited versions they may grab to evaluate a new part before forking over dough. I’m not a big fan of the hover-over-to-view-bit-values feature in the peripheral registers view.

Debugging

Debugging across all Microchip devices is much slower than in other IDEs.

I’m not sure if this is a limitation of the MCU, the PICkit 3, or the IDE (or all of the above). The default behavior of MPLAB X is to reconnect to the tool whenever starting a debug session, but you can shave a few seconds off the debug load time by instructing the IDE to maintain a constant connection to the tool by ticking the appropriate box in the Options dialogue. This really ought to be the default option, as few developers know of its existence, debugging is slow enough as it is, and there are very few use cases where you’d want the IDE to disconnect from the debugger upon completing a debug session. While there’s an option to display a Disassembly view while debugging, this doesn’t come up by default, and even when you select it, it doesn’t seem to be “sticky”: you have to re-open the view every time you start a debug session.

This is fine for Java or desktop C/C++ development, but for embedded microcontrollers, disassembly view is critical. MPLAB X’s NetBeans backend seems confused by bit-field register definitions, which makes hover-over symbol inspection basically worthless when you’re in a debug session.

Another afterthought seems to be the peripheral register view, and peripheral register handling in general. Pull up the SFRs view, and you’ll be greeted by an extremely slow-loading window that’s painful to scroll through — especially on larger devices like the PIC32. This view displays the address of the register (who cares?), the hard-to-understand short-form name, the hex value of the register, the decimal value of the register (who cares?), the binary value (hilariously long when working on 16- and 32-bit processors), and an ASCII interpretation of the register’s value (why on Earth?) — but what’s not in this view is: a human-readable name of the register, description of the register’s function, or a human-readable decoding of the register’s current value. There’s a hover-over break-down of the register into its individual bits, but these use the same short-form datasheet names, don’t provide descriptions, and don’t contain enumeration values for multi-bit fields (or any explanation at all). Basically, you’re going to have to have your datasheet open so that you can hand-decode the values of these registers, as Microchip doesn’t seem interested in integrating that style of documentation into their IDE. These are the things that drive me nuts — it’s 2017; all of this data is already computerized.
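To illustrate how little is needed for the "human-readable decoding" missing from that view, here is a small sketch that breaks a register value into named bit fields with enumerated values. The register layout, field names, and enumeration labels below are entirely hypothetical (they are not taken from any Microchip datasheet or IDE); only the idea of naming constants like SYSCLK_DIV_n comes from the register viewers praised earlier.

```python
from dataclasses import dataclass, field


@dataclass
class BitField:
    name: str
    lsb: int          # position of the least-significant bit
    width: int        # number of bits in the field
    description: str
    enums: dict = field(default_factory=dict)  # raw value -> readable label


def decode(value: int, fields: list) -> list:
    """Return one readable line per bit field of a register value."""
    lines = []
    for bf in fields:
        raw = (value >> bf.lsb) & ((1 << bf.width) - 1)
        label = bf.enums.get(raw, f"0x{raw:X}")  # fall back to hex if unnamed
        lines.append(f"{bf.name} = {label} ({bf.description})")
    return lines


# Hypothetical 8-bit clock-select register, for illustration only.
CLKSEL_FIELDS = [
    BitField("CLKDIV", 4, 3, "System clock divider",
             {0: "SYSCLK_DIV_1", 1: "SYSCLK_DIV_2", 2: "SYSCLK_DIV_4", 3: "SYSCLK_DIV_8"}),
    BitField("CLKSL", 0, 2, "Clock source",
             {0: "HFOSC", 1: "EXTOSC", 2: "LFOSC"}),
]

for line in decode(0x20, CLKSEL_FIELDS):
    print(line)
```

In a real tool, the field table would come from the vendor's machine-readable device description rather than being typed in by hand.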

All they need to do is add a bit more functionality to their view (which many Eclipse-based vendor tools have), and all of a sudden, the SFR view becomes ten times more productive to use. For higher-end PIC32 and PIC24 devices, there’s a semihosting-like feature Microchip calls appIO (though it only works with their pricy ICD debuggers). There’s also runtime variable watch and instruction tracing, but that’s only supported by the really pricy RealICE debugger.

HT-IDE3000 looks like Office 2003’s ugly cousin, but its ancient GUI toolkit snaps along on modern systems at lightning speed. Text completion is basic but rocket-fast, and the IDE integrates well with the debugger and target MCU.

Holtek HT-IDE3000

Anyone who complains about IDE bloat and download registration walls should immediately check out Holtek HT-IDE3000 (yes, that’s what it’s called). Click the download link, and a small 90 MB ZIP file starts downloading. They do make you type in the serial number on your $40 eLink debugger before using it (likely to deter debugger cloning), but that’s the only registration you’ll see. Once you get the IDE up and running, the first thing you’ll notice is its Office 2003 look. It ain’t pretty, but it’s functional.

All the menu buttons you see are completely customizable through a drag-and-drop editor, which I didn’t see in any other IDE tested. The best part about having such an old-school IDE is that this thing screams: zero-lag text-editing, immediate hover-over pop-up tooltips with function source code (like the Source Hover feature in Eclipse, but much more lightweight), and zippy project building. I did a double-take when looking at RAM usage: HT-IDE3000 uses 9.9 MB of RAM. Yes, that decimal point is placed properly. This comes at the expense of code intelligence features. IDE3000 can go to variables, macros, and function definitions; auto-complete variables and functions; and display pop-up source listings of functions when you hover over them. But there are no real macro expansion capabilities or code outline views, and the text completion is pretty basic: it recognizes C types and functions but doesn’t seem to like remembering SFRs or other types of things that get #define’d.

There’s no macro expansion, and the text completion isn’t intelligent; it’s only a selectable list of all symbols visible in the given context. This turns out to be good enough for the sorts of projects these small devices tackle. It’s not like you’re going to be juggling around more than a few dozen variables, and I’d bet 90% of the code-bases that target these parts comprise a single C file. What you do get with Holtek is deep integration with the device you’re targeting. There’s built-in functionality for interacting with EEPROM on the device, programming using USB bootloaders present in some of their chips, as well as a built-in OTP workflow for generating the appropriate files Holtek needs to manufacture your parts (since most Holtek devices sold are OTP parts).

Debugging in HT-IDE3000 is lightweight and snappy, with most features you’d expect. I wish the register view had more information about the individual bits (at least their full name and description as a hover-over tooltip), but it gets the job done, especially considering how simple the architecture this IDE targets is.

As soon as you build a project, IDE3000 will jump into a debugging session in the background, even as you continue editing and building. This unconventional experience lures you to tap that “Build” button often, and gush at your progress.

As most firmware projects targeting this microcontroller will hover in a while() loop until interacted with, you can double-click on a line of code while you’re editing to set a breakpoint, immediately check out what’s going on, make changes, and continue editing, without the manual process of uploading code, switching to a debug perspective, waiting for the image to flash and start running, and waiting for a breakpoint to hit. Code building and uploading are so fast, it almost feels like you’re in a simulator or a PC-based environment. The debugging views are basic but fully functional. The peripheral register view will break down each register into the bits that are set and cleared, but I would have preferred hover-over descriptions of the registers and the bits they command. While Holtek could modernize the UI, you’ll get no complaints about stability from me: I didn’t have a single crash, bug, hiccup, or driver installation kerfuffle the entire time I used IDE3000. I tried it on both Windows 7 and the Windows 10 Fall Creators Update, and the experience was identical.
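To make the "hover in a while() loop until interacted with" pattern concrete, here is a minimal sketch of that kind of firmware structure. It is written so it compiles on a PC; the `app_state_t` type and the `app_step` function are hypothetical names for illustration, and on a real Holtek part the button flag and LED would be pins or SFRs rather than struct fields.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical application state. On real hardware, button_pressed would be
 * set by an ISR or read from a pin, and led_on would drive an output pin. */
typedef struct {
    volatile bool button_pressed;
    uint8_t led_on;
    uint32_t presses;
} app_state_t;

/* One pass through the body of the main loop. Nothing happens unless the
 * button flag is set, which is why a breakpoint inside the if-block only
 * triggers when you interact with the board. */
static void app_step(app_state_t *s)
{
    assert(s != NULL);
    if (s->button_pressed) {
        s->button_pressed = false;  /* consume the event */
        s->led_on ^= 1u;            /* toggle the LED state */
        s->presses++;
    }
}

/* On a target, main() would typically be:
 *
 *     static app_state_t state;
 *     for (;;) { app_step(&state); }
 */
```

Because the loop spends nearly all of its time doing nothing, setting a breakpoint on the toggle line while the target runs costs nothing until the next button press.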

Holtek keeps up a brisk update schedule, squashing bugs and adding new features several times a year; in fact, they had two updates over the course of my writing this review, which is more than any other IDE.

STVD supports decent text completion, and customizable editor colors and fonts.

ST Visual Develop (STVD)

STVD is the official IDE for the. Its UI feels even older than IDE3000, but it’s a bit better when it comes to text-editing capabilities. Its code completion is invoked with the familiar Ctrl-Spacebar shortcut, and instead of just displaying all symbols discovered, it seems to default to symbols that make sense in the present context.

It knows enough about C to walk into pointer-referenced structs, but has no pop-up documentation or source-code browsing when you hover over a function. Unfortunately, it feels slow.

Code completion can take a second or longer to pop up in larger projects, rearranging toolbars was sluggish, going to the definition of a symbol took time to load the editor window, and menus were slow to appear.

The peripheral registers view had descriptions of all the registers, but didn’t break down registers into individual bits (or explain what they were).
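When a register view only shows the whole value, a small helper can stand in for the missing per-bit breakdown. The sketch below decodes an 8-bit value against a table of bit names. The table contents (`ENABLE`, `IRQ_EN`, `ONE_SHOT`, `PRELOAD`) are a made-up layout for illustration, not taken from any datasheet; in practice you would transcribe the names from the part's reference manual.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

typedef struct {
    uint8_t bit;        /* bit position, 0..7 */
    const char *name;   /* field name as printed in the datasheet */
} bit_name_t;

/* Hypothetical timer control register layout (illustration only). */
static const bit_name_t TIMER_CR_BITS[] = {
    {0, "ENABLE"}, {1, "IRQ_EN"}, {3, "ONE_SHOT"}, {7, "PRELOAD"},
};

/* Writes the names of all set bits into out, separated by " | ".
 * Returns how many names were written in full; stops early if out is
 * too small to hold the next name. */
static size_t describe_bits(uint8_t value, const bit_name_t *tbl, size_t n,
                            char *out, size_t out_len)
{
    size_t used = 0, count = 0;
    assert(tbl != NULL && out != NULL);
    if (out_len == 0) {
        return 0;
    }
    out[0] = '\0';
    for (size_t i = 0; i < n; i++) {
        if (value & (1u << tbl[i].bit)) {
            int w = snprintf(out + used, out_len - used, "%s%s",
                             count ? " | " : "", tbl[i].name);
            if (w < 0 || (size_t)w >= out_len - used) {
                break;  /* buffer exhausted */
            }
            used += (size_t)w;
            count++;
        }
    }
    return count;
}
```

Feeding it the hex value copied from the debugger (for example 0x81 with the table above) yields a readable list of set fields, which is quicker than counting bits against a datasheet table by hand.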

Debugging was also worse than average. Breakpoints can’t be set while the target is running: you must manually pause it, add your breakpoint, and then continue execution. The peripheral registers view was also underwhelming: while it has descriptions of registers, it only displays the whole value of the register, in hex format, without giving you a per-bit breakdown useful for catching bit math bugs in your code. Yet again, you’ll be resigned to manually cross-checking register values with values from the datasheet, one of my biggest pet peeves.

PSoC Creator is built around Cypress’s code-gen tools, which provide a schematic capture interface for instantiating and connecting components; these tools generate the configuration bitstream along with an API for the peripherals.

PSoC Creator

Cypress PSoC Creator is the official, and only, development environment available for Cypress’s line of PSoC devices, and encompasses a project management system, text editor, schematic capture tool, code generator, and debugger. PSoC Creator’s interface lurks out of the last decade with its Office 2003 costume, and its .NET codebase ensures porting it to Linux or macOS would be an arduous task that Cypress itself concedes is unlikely. PSoC Creator feels snappier and lighter-weight than Eclipse or NetBeans-based environments while maintaining 90% of the IDE features and code introspection abilities. Loadable workspaces weave together one or more projects, any number of which can be open at the same time, exactly as Eclipse does. A Code Explorer pane leads you to variable and function definitions with a single click, while the text completion digs deep into functions, macros, and variables to bubble up suggestions with the familiar Ctrl-Spacebar shortcut.

Cypress needs to refashion hover-over tooltips into what Eclipse or IDE3000 does; as it stands, the tooltips print the name of the function and its parameters, with no code docs and no quick source view you can use to peek at a function’s implementation. There’s one-click documentation access, plus a resource meter for monitoring not only flash and SRAM usage but also peripheral usage, which shows you exactly how integrated PSoC Creator is with the underlying PSoC hardware. The 500 lb gorilla in the PSoC Creator ecosystem, the schematic capture configurator tool, is omnipresent in PSoC Creator, but I’ll save that discussion for the code generation section of this review.

PSoC Creator has no global register inspector like other environments; you can only view peripheral registers associated with instantiated components.

Flash load times were unimpressive (though not the worst seen in my round-up). A basic, 1.4 KB program took 7.82 seconds to load and run to main(). Filling flash up to 16 KB took 10.84 seconds.

PSoC Creator jostled my brain when I strained to reduce power consumption in my DMX project. I couldn’t find a standard list of all peripheral registers which would allow me to cross-reference bits from the datasheet; instead, you can select from the peripherals you’ve configured in the schematic capture view, which opens a limited view of that specific peripheral (displaying only the registers associated with its current function).

As this is an ARM microcontroller, you may intuit that a cross-platform GCC-based toolchain would be easy to set up, but because of the proprietary configuration bitstream required when flashing these devices, you’ll need to use Cypress’s tools to fit your design to the processor and merge this bitstream with your application binary code. While Cypress stops short of supporting Linux or macOS outright, PSoC Creator can export projects to common cross-platform Eclipse or Makefile-based environments, as well as to industry stalwarts µVision and IAR. But if you’re on Windows, I wouldn’t bother: this is as close to an Eclipse/NetBeans workflow as you’ll get with a proprietary IDE, and it’s definitely good enough to compete with either.
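The two PSoC Creator flash-load timings quoted above (1.4 KB in 7.82 seconds, 16 KB in 10.84 seconds) can be split into a fixed overhead and a per-kilobyte cost. The sketch below does that with a straight line through the two points. The linear model is my assumption, not something measured in the review, and the type and function names are hypothetical.

```c
#include <assert.h>

typedef struct {
    double kb;       /* image size in KB */
    double seconds;  /* time to load and run to main() */
} load_sample_t;

typedef struct {
    double kb_per_s;    /* incremental programming throughput */
    double overhead_s;  /* fixed cost: connect, erase, launch, and so on */
} load_model_t;

/* Fits seconds = overhead_s + kb / kb_per_s through two samples.
 * Assumes load time grows linearly with image size. */
static load_model_t fit_load_model(load_sample_t small, load_sample_t large)
{
    load_model_t m;
    assert(large.kb > small.kb && large.seconds > small.seconds);
    m.kb_per_s = (large.kb - small.kb) / (large.seconds - small.seconds);
    m.overhead_s = small.seconds - small.kb / m.kb_per_s;
    return m;
}
```

With the review's numbers, this works out to roughly 4.8 KB/s of incremental throughput and about 7.5 seconds of fixed overhead, which suggests most of the wait is session setup rather than actual flash programming.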

Keil µVision

Keil’s fame stems from their acclaimed 8051 compiler, C51, introduced in 1988, but since 1997, their compilers have also shipped with their in-house IDE, Keil µVision. Now at version 5, µVision continues to be popular among professional dev shops for both Arm and 8051 development, even as more and more manufacturers roll their own cross-platform toolchains. Speaking of that, while every Arm part I reviewed has its own manufacturer-provided IDE (save for the M051), developers can use µVision as an officially-supported development environment for all of them. On the other end of the spectrum, among the 8051 parts, only Silicon Labs maintains its own IDE (for the EFM8); both the N76 and STC8 support only µVision.

Keil’s IDE has support for multi-project workspaces, though it doesn’t enforce their use.

Keil’s text editor is painful to use. The first two things you’ll notice are that there is no auto-indenting inside block statements, and out of the box, there’s no keyboard shortcut for commenting or uncommenting code. Sure, you can bind “comment text selection” and “uncomment text selection” to shortcut keys, but you have to select the text before invoking. This is ridiculous. Even more painful: µVision has a Jekyll-and-Hyde act when switching between C51 (8051) and MDK (Arm) projects. When building Arm projects, µVision has ok-but-not-great text completion (in the form of a pop-up list of discovered symbols), but when building 8051 projects, that text completion inexplicably vanishes.

Keil µVision’s Arm debugging has a functional peripheral inspector that breaks each register into its logical fields, with named descriptions of any enumeration values.

Debugging is the same sad song. Keil has good support for semihosting in Arm projects without needing much user intervention. A decent peripheral register explorer helps you diagnose peripheral issues with named-value fields that have nice descriptions.

Debugging on the 8051 is a different story: the pop-up peripheral inspectors are clunky, and not every vendor adds the proper support to Keil.

But in 8051land, weird pop-up peripheral inspectors stand in for the peripheral pane. While these curtail my productivity, I must admit they are more amusing than their Arm brethren (let’s be honest: who doesn’t love clicking checkboxes and watching LEDs light up?). I scoured the menus, feature lists, help files, and online examples trying to redeem µVision by finding something it excelled at compared to the other IDEs I evaluated. I suppose it’s much lighter-weight than Eclipse is, coming in at 105 MB of RAM usage during a debug session. It makes too many concessions for me to even consider it alongside Eclipse, though. In the end, consider µVision as nothing more than the free editor you get when you download C51 or MDK-ARM. And it’s not worth a penny more.

Code generation tools

Peripheral configuration and bring-up is generally a drop in the bucket when compared to the time required to implement an entire commercial embedded project, but if you’re working on tiny projects (either hobbyist stuff, or simple proof-of-concept engineering demos), having a code-gen tool can noticeably speed up the development cycle. Many of the development environments tested have code-gen tools either integrated directly into the IDE (Microchip Code Configurator, Simplicity Configurator, Infineon DAVE, PSoC Creator, Processor Expert) or available as stand-alone tools (STM32CubeMX, Atmel START, STC-ISP, MCUXpresso Config Tools). Some generate initialization code that calls into the vendor’s general-purpose peripheral libraries (like STM32CubeMX), while some generate raw register initialization values from scratch (Silicon Labs’ Simplicity Configurator).

While Atmel START provides a nice-looking graphical interface especially useful for the flexible clocking schemes available on modern ARM microcontrollers, its design validation is hit or miss: in this picture, there’s absolutely no warning that the main 32 kHz oscillator isn’t enabled. Most seriously, the DFLL module indicates it’s outputting a frequency of 48 MHz, even though its multiplier is set to “0” in the configuration properties dialogue. Changing this to arbitrary values does not update the “48 MHz” display. This is a tool you cannot trust.

Atmel START

Microchip’s code-gen tool for its Atmel acquisition is a hold-over called Atmel START; it’s a web-based code configurator that supports nearly their whole catalog of current devices. Under the hood, it generates peripheral libraries that are considered part of ASF4 (Atmel, err, “Advanced” Software Framework). This is a marked improvement over ASF3 on paper, but there are still significant problems with it. First, the good: using it with 8-bit megaAVR and tinyAVR devices is a no-brainer. You can use the “initialization” drivers instead of the “basic” drivers to generate init-only code, along with any stubbed-out ISR functions you need.

The generated code is beautifully documented, extremely readable, and compact. It’s written the way you would probably write it by hand, and I can imagine this would serve as a nice learning tool for someone coming from Arduino, looking to take baby steps toward programming MCUs at the register level. Now the bad: Atmel START is extremely clunky to use. Projects must originate in the web browser, then get exported as a ZIP archive, which Atmel Studio extracts into a solution that you can then open. If you want to change something, thankfully Atmel Studio allows you to right-click on the project and reconfigure it, opening a browser window inside the app and automatically loading the project.

But this raises the question: why can’t Atmel Studio create these projects from the get-go, if it can edit and re-generate them? Another problem with Atmel START is the lack of placeholder markers the tool can use to determine what to preserve and what to overwrite; this is only done at a whole-file level. I assume this is why they don’t pre-define user callback function stubs, as these would get overwritten on subsequent regenerations of the code. This is a lazy design decision that makes integrating code with Atmel START challenging. More bad news for START: Atmel’s SAM D-series parts I evaluated require lots of clock configuration (like most Arm parts), but START provides absolutely no error-checking or clocking suggestions for you. It will gladly generate code that uses the main DFLL without actually enabling the main DFLL, causing your program to hang in a while() loop waiting for the DFLL to start up, when it never will. This is the crap that makes getting going on a new platform so challenging, and this is precisely why I like using code configuration tools.
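The DFLL hang described above is easy to guard against in hand-written code. The sketch below is not the code Atmel START generates and does not use real SAM D register names: `fake_dfll_t`, `DFLL_ENABLE`, `DFLL_READY`, and `dfll_wait_ready` are hypothetical stand-ins so the idea can be compiled and tried on a PC. It shows a bounded wait that also refuses to wait on a clock that was never enabled.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a clock peripheral's registers. */
typedef struct {
    volatile uint32_t ctrl;    /* bit 0: enable request */
    volatile uint32_t status;  /* bit 0: ready flag set by hardware */
} fake_dfll_t;

#define DFLL_ENABLE (1u << 0)
#define DFLL_READY  (1u << 0)

/* The failure mode from the text is an unbounded wait:
 *
 *     while (!(dfll->status & DFLL_READY)) { }
 *
 * which spins forever if the clock was never enabled. */

/* Bounded alternative: returns true once the ready flag is seen, false if
 * the clock is not enabled or the flag does not appear within max_polls. */
static bool dfll_wait_ready(const fake_dfll_t *dfll, uint32_t max_polls)
{
    assert(dfll != NULL);
    if (!(dfll->ctrl & DFLL_ENABLE)) {
        return false;  /* nothing to wait for: the clock was never started */
    }
    while (max_polls--) {
        if (dfll->status & DFLL_READY) {
            return true;
        }
    }
    return false;  /* timed out */
}
```

A caller can then fall back to a known-good clock or signal an error instead of silently hanging during startup.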

So if a tool can’t do that, I scarcely see much value in it that I can’t get from normal peripheral libraries.

Processor Expert provides a stunning array of configuration options and flexibility, but make sure you have a fast desktop PC, as this tool is a huge resource hog. It also generated some of the slowest peripheral code in the round-up.

Processor Expert

Processor Expert is at the other end of the spectrum. PE generates initialization code, interrupts, user callbacks, linker files, and an entire peripheral library. It uses a component-oriented model with dependency resolution; for example, two high-level “PWM” component instances will share a common “Timer Unit” low-level component (as they will end up on different output-compare channels of that timer unit). High-level components implement conceptual functionality, not peripheral functions.
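The shared "Timer Unit" idea can be illustrated with a toy model. This is not Processor Expert's generated code or API; `timer_unit_t`, `pwm_t`, `pwm_attach`, and the four-channel limit are all assumptions made up for the sketch. It only shows the dependency-resolution step: each high-level PWM instance claims the next free output-compare channel on a common low-level timer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TIMER_CHANNELS 4  /* assumed channel count, for illustration */

/* Low-level component: one hardware timer with several compare channels. */
typedef struct {
    const char *name;
    uint8_t channels_used;  /* bitmask of claimed channels */
} timer_unit_t;

/* High-level component: a PWM output that depends on some timer channel. */
typedef struct {
    timer_unit_t *timer;
    int channel;  /* -1 while unassigned */
} pwm_t;

/* Resolves the PWM's dependency by claiming the first free channel on the
 * given timer. Returns the channel index, or -1 if the timer is full. */
static int pwm_attach(pwm_t *pwm, timer_unit_t *timer)
{
    assert(pwm != NULL && timer != NULL);
    for (int ch = 0; ch < TIMER_CHANNELS; ch++) {
        if (!(timer->channels_used & (1u << ch))) {
            timer->channels_used |= (uint8_t)(1u << ch);
            pwm->timer = timer;
            pwm->channel = ch;
            return ch;
        }
    }
    pwm->timer = NULL;
    pwm->channel = -1;
    return -1;
}
```

Attaching two `pwm_t` instances to the same `timer_unit_t` leaves them on channels 0 and 1 of one timer, which mirrors the two-PWMs-one-timer example in the text.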